Perplexity AI “Uncensors” DeepSeek R1: Who Decides AI’s Boundaries?

In a move that has caught the attention of many, Perplexity AI has released a new version of a popular open-source language model with its built-in Chinese censorship removed. The modified model, dubbed R1 1776 (a name that evokes the spirit of American independence), is based on the Chinese-developed DeepSeek R1. The original DeepSeek R1 made waves by reportedly rivaling top-tier models in reasoning ability at a fraction of the cost, but it came with a considerable limitation: it refused to engage with certain sensitive topics.

Why does this matter?

It raises crucial questions about AI oversight, bias, openness, and the role of geopolitics in AI systems. This article examines what exactly changed, the implications of uncensoring the model, and how it fits into the broader conversation about AI transparency and censorship.

What happened: DeepSeek R1 goes uncensored

DeepSeek R1 is an open-weight large language model that originated in China and became known for its excellent reasoning capabilities, even approaching the performance of leading models while being considerably more computationally efficient. However, users quickly noticed a catch: when asked about topics considered sensitive in China (for example, political controversies or historical events the authorities regard as taboo), DeepSeek R1 would not answer directly. Instead, it responded with state-approved talking points or outright refusals, in line with Chinese government censorship rules. This built-in bias limited the model's usefulness for anyone seeking frank or nuanced discussion of those topics.

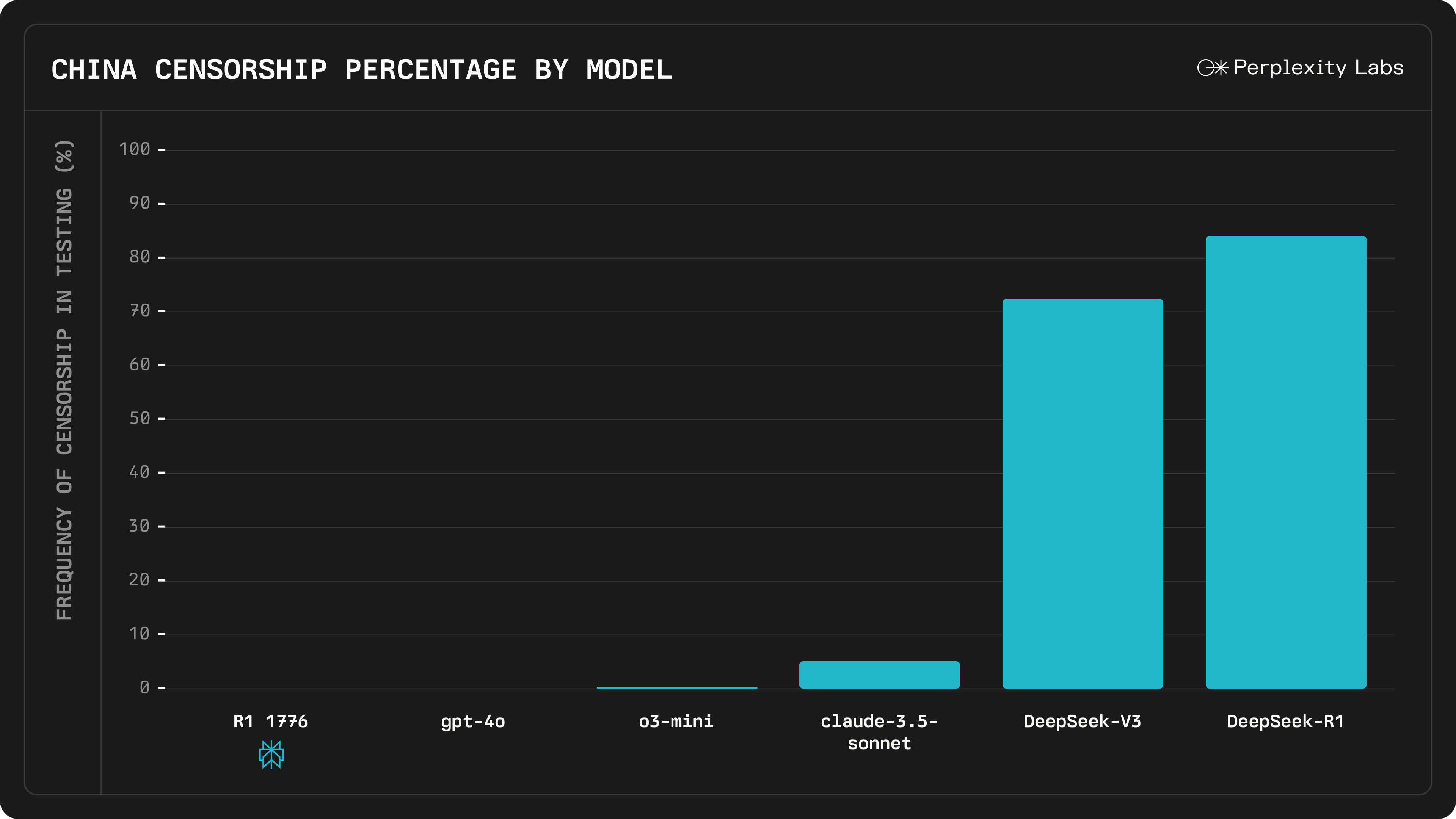

Perplexity AI's solution was to “decensor” the model through extensive post-training. The company collected a dataset of 40,000 multilingual prompts covering questions that DeepSeek R1 had previously avoided or answered evasively. With the help of human experts, it identified roughly 300 sensitive topics on which the original model tended to toe the party line. For each such prompt, the team supplied factual, well-reasoned answers in several languages. These efforts fed into a multilingual censorship detection and correction system, essentially teaching the model to recognize when political censorship applied and to respond with an informative answer instead. After this specialized fine-tuning, Perplexity released the model openly under the nickname “R1 1776” to emphasize the freedom theme. Perplexity claims to have eliminated the Chinese censorship filters and biases from DeepSeek R1's answers without otherwise changing its core capabilities.
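To make the process above more concrete, here is a minimal Python sketch of how flagged prompts might be paired with curated answers to form a fine-tuning set. It is not Perplexity's pipeline: the topic list, the keyword-based `looks_censored` check (a crude stand-in for the multilingual classifier the company describes), and the names `TrainingExample` and `build_training_set` are all hypothetical, and the fine-tuning step itself is omitted.

```python
from dataclasses import dataclass

# Hypothetical stand-in for the ~300 expert-identified sensitive topics.
# A real list would be far larger and multilingual; these are illustrative only.
SENSITIVE_TOPICS = ("tiananmen", "taiwan")


@dataclass
class TrainingExample:
    prompt: str
    language: str
    target: str  # curated, factual answer used as the fine-tuning target


def looks_censored(prompt: str) -> bool:
    """Toy keyword check standing in for a multilingual censorship classifier."""
    text = prompt.lower()
    return any(topic in text for topic in SENSITIVE_TOPICS)


def build_training_set(prompts, curated_answers):
    """Pair each flagged prompt with an expert-written answer, skipping the rest.

    prompts: iterable of (prompt_text, language_code) tuples
    curated_answers: dict mapping prompt_text -> factual answer
    """
    examples = []
    for text, language in prompts:
        # Keep a prompt only if it is flagged as sensitive AND an expert answer exists.
        if looks_censored(text) and text in curated_answers:
            examples.append(TrainingExample(text, language, curated_answers[text]))
    return examples


if __name__ == "__main__":
    prompts = [
        ("What happened at Tiananmen Square in 1989?", "en"),
        ("How could Taiwan's independence affect NVIDIA's stock?", "en"),
        ("Explain how bubble sort works.", "en"),
    ]
    answers = {
        "What happened at Tiananmen Square in 1989?": "A curated, factual answer would go here.",
    }
    for ex in build_training_set(prompts, answers):
        print(ex.language, ex.prompt)
```

Here the Taiwan prompt is flagged but skipped because no curated answer exists for it yet, and the bubble-sort prompt is never flagged. Keyword matching would miss paraphrases and non-English prompts, which is presumably why a learned multilingual classifier and human review were used in practice; the sketch only shows the shape of the data flow.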

Crucially, R1 1776 behaves very differently on previously taboo questions. Perplexity gave the example of a query about Taiwan's independence and its potential impact on NVIDIA's stock price, a politically sensitive subject that touches on China-Taiwan relations. The original DeepSeek R1 dodged the question and answered with CCP-aligned platitudes. R1 1776, by contrast, provides a detailed, candid assessment: it discusses concrete geopolitical and economic risks (supply chain disruptions, market volatility, possible conflict, etc.) that could affect NVIDIA's shares.

By open-sourcing R1 1776, Perplexity has also made the model's weights and modifications transparent to the community. Developers and researchers can download it from Hugging Face and even integrate it via API, so others can examine and build on the removal of censorship.

(Source: Perplexity AI)

Implications of removing the censorship

Perplexity AI's decision to remove the Chinese censorship from DeepSeek R1 has several important implications for the AI community:

- Improved openness and truthfulness: Users of R1 1776 can now receive uncensored, direct answers on previously off-limits topics, a win for open research. This can make it a more reliable assistant for researchers, students, or anyone curious about sensitive geopolitical questions. It is a concrete example of open-source AI being used to counter information suppression.

- Maintained performance: There were concerns that modifying the model to remove censorship could degrade its performance in other areas. However, Perplexity reports that R1 1776's core skills, such as mathematics and logical reasoning, remain on par with the original model. In tests on more than 1,000 examples covering a wide range of sensitive questions, the model proved to be “fully uncensored” while maintaining the same level of reasoning accuracy as DeepSeek R1. This suggests that bias removal (at least in this case) did not come at the expense of overall intelligence or capability, an encouraging sign for similar efforts in the future.

- Positive community reception and collaboration: By open-sourcing the decensored model, Perplexity invites the AI community to inspect and improve on its work. It demonstrates a commitment to transparency, the AI equivalent of showing one's work. Enthusiasts and developers can verify that the censorship restrictions are truly gone and potentially contribute further refinements. This fosters trust and collaborative innovation in an industry where closed models and hidden moderation rules are common.

- Ethical and geopolitical considerations: On the other hand, removing censorship entirely raises complex ethical questions. One immediate concern is how this uncensored model might be used in contexts where the censored subjects are illegal or dangerous to discuss. For example, if someone in mainland China were to use R1 1776, the model's uncensored answers about Tiananmen Square or Taiwan could put that user at risk. There is also the broader geopolitical signal: an American company modifying a Chinese-origin model to defy Chinese censorship can be seen as a bold ideological statement. The name “1776” underlines a theme of liberation, which has not gone unnoticed. Some critics argue that this may simply replace one set of biases with another, in essence asking whether the model could now reflect a Western point of view on sensitive issues. The debate highlights that censorship versus openness in AI is not only a technical issue but a political and ethical one. Where one person sees necessary moderation, another sees censorship, and finding the right balance is difficult.

The removal of censorship is largely celebrated as a step toward more transparent and globally useful AI models, but it is also a reminder that what an AI should be allowed to say is a sensitive question with no universal consensus.

(Source: Perplexity AI)

The bigger picture: AI censorship and open-source transparency

Perplexity's launch of R1 1776 comes at a time when the AI community is grappling with questions about how models handle controversial content. Censorship in AI models can come from many sources. In China, technology companies are required to build in strict filters and even hard-coded responses for politically sensitive topics. DeepSeek R1 is a good example: it was an open-source model, but it clearly bore the imprint of China's censorship standards in its training and fine-tuning. On the other hand, many Western-developed models, such as OpenAI's GPT-4 or Meta's Llama, are not bound by CCP guidelines, but they still have moderation layers (for things such as hate speech, violence, or disinformation) that some users call “censorship.” The line between reasonable moderation and unwanted censorship can be blurry and often depends on cultural or political perspective.

What Perplexity AI did with DeepSeek R1 reinforces the idea that open-source models can be adapted to different value systems or regulatory environments. In theory, one could create multiple versions of a model: one that complies with Chinese regulations (for use in China) and another that is fully open (for use elsewhere). R1 1776 is essentially the latter: an uncensored fork intended for a global audience that prefers unfiltered answers. This kind of fork is only possible because DeepSeek R1's weights were openly available. It underscores a key benefit of open source in AI: transparency. Anyone can take the model and adapt it, whether to add safeguards or, as in this case, to remove imposed restrictions. Openly releasing a model's training data, code, or weights also means the community can audit how the model has been changed. (Perplexity did not fully disclose all the data sources used for decensoring, but by releasing the model itself, it has enabled others to observe its behavior and even retrain it if necessary.)

This event also points to the broader geopolitical dynamics of AI development. We are seeing a form of dialogue (or confrontation) between different governance models for AI. A Chinese-developed model with certain baked-in worldviews is taken by a US-based team and modified to reflect a more open information ethos. It shows how global and borderless AI technology is: researchers anywhere can build on each other's work, but they are not obliged to carry over the original limitations. Over time we may see more instances of this, where models are “translated” or adapted between different cultural contexts. It raises the question of whether AI can ever be universal, or whether we will end up with region-specific versions that adhere to local standards. Transparency and openness offer one way to navigate this: if all parties can inspect the models, the conversation about bias and censorship happens in the open instead of behind corporate or government secrecy.

Finally, Perplexity's move underlines an important point in the debate on AI control: who gets to decide what an AI can or cannot say? In open-source projects, this power is decentralized. The community, or individual developers, can choose to implement stricter filters or to relax them. In the case of R1 1776, Perplexity decided that the benefits of an uncensored model outweighed the risks, and it had the freedom to make that call and share the result publicly. It is a bold example of the kind of experimentation that open AI development makes possible.