AI Struggles to Emulate Historical Language

A collaboration between researchers in the United States and Canada has found that large language models (LLMs) such as ChatGPT struggle to reproduce historical idioms without extensive pretraining – a costly and labor-intensive process that lies beyond the means of most academic or entertainment initiatives. This makes projects such as using AI to complete Charles Dickens’s final, unfinished novel an unlikely proposition.

The researchers explored a range of methods for generating text that sounded historically accurate, starting with simple prompting using early twentieth-century prose, and moving to fine-tuning a commercial model on a small collection of books from that period.

They also compared the results to a separate model that had been trained entirely on books published between 1880 and 1914.

In the first of the tests, instructing ChatGPT-4o to mimic fin‑de‑siècle language produced quite different results from those of the smaller GPT2-based model that had been fine‑tuned on literature from the period:

Asked to complete a real historical text (top-center), even a well-primed ChatGPT-4o (lower left) cannot help lapsing back into ‘blog’ mode, failing to represent the requested idiom. By contrast, the fine-tuned GPT2 model (lower right) captures the language style well, but is not as accurate in other ways. Source: https://arxiv.org/pdf/2505.00030

Though fine-tuning brings the output closer to the original style, human readers were still frequently able to detect traces of modern language or ideas, suggesting that even carefully-adjusted models continue to reflect the influence of their contemporary training data.

The researchers arrive at the frustrating conclusion that there are no economical short-cuts towards the generation of machine-produced idiomatically-correct historical text or dialogue. They also conjecture that the challenge itself might be ill-posed:

‘[We] should also consider the possibility that anachronism may be in some sense unavoidable. Whether we represent the past by instruction-tuning historical models so they can hold conversations, or by teaching contemporary models to ventriloquize an older period, some compromise may be necessary between the goals of authenticity and conversational fluency.

‘There are, after all, no “authentic” examples of a conversation between a twenty-first-century questioner and a respondent from 1914. Researchers attempting to create such a conversation will need to reflect on the [premise] that interpretation always involves a negotiation between present and [past].’

The new study is titled Can Language Models Represent the Past without Anachronism?, and comes from three researchers across the University of Illinois, the University of British Columbia, and Cornell University.

Complete Disaster

In the first stage of a three-part research approach, the authors tested whether modern language models could be nudged into mimicking historical language through simple prompting. Using real excerpts from books published between 1905 and 1914, they asked ChatGPT‑4o to continue these passages in the same idiom.

The original period text was:

‘In this last case some five or six dollars is economised per minute, for more than twenty yards of film have to be reeled off in order to project during a single minute an object of a person in repose or a landscape. Thus is obtained a practical combination of fixed and moving pictures, which produces most artistic effects.

‘It also enables us to work two cinematographs projecting alternately in order to avoid scintillation, or projecting simultaneously red and green images and reproducing natural colours, thus relieving the human eye, accustomed to receiving the fundamental colours simultaneously, from all physiological fatigue. A word now about the application of cold light to instantaneous photography.’

To evaluate whether the generated text matched the intended historical style, and conscious that people are not especially skilled at guessing the date that a text was written, the researchers fine‑tuned a RoBERTa model to estimate publication dates, using a subset of the Corpus of Historical American English, covering material from 1810 to 2009.

The RoBERTa classifier was then used to assess continuations produced by ChatGPT‑4o, which had been prompted with real passages from books published between 1905 and 1914.

The system prompt (i.e., contextual instructions to ChatGPT about how to approach the task) was:

‘Your task is to complete passages from early twentieth-century books. You will be given a passage from a book published in 1913. Continue this passage in the same style, for at least 200 words. Only provide this continuation; do not make any framing remarks, like “here is the continuation:”.’

Despite both one-shot and 20-shot prompting, ChatGPT-4o’s outputs consistently skewed toward a 21st-century stylistic register.
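As a rough illustration of what one-shot and 20-shot prompting involve, the sketch below assembles a chat-style message list from the system prompt quoted above plus a number of example passage/continuation pairs. The role/content message layout is the common chat-completion convention; the function name `build_messages`, the placeholder example pairs, and the way the shots are interleaved are assumptions made for illustration, not the paper's code.

```python
SYSTEM_PROMPT = (
    "Your task is to complete passages from early twentieth-century books. "
    "You will be given a passage from a book published in 1913. "
    "Continue this passage in the same style, for at least 200 words. "
    "Only provide this continuation; do not make any framing remarks, "
    "like 'here is the continuation:'."
)


def build_messages(examples, passage, system_prompt=SYSTEM_PROMPT):
    """Build a chat message list containing len(examples) in-context shots.

    examples: list of (passage, continuation) pairs taken from period books.
    passage:  the passage the model is actually asked to continue.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for shot_passage, shot_continuation in examples:
        # Each shot is presented as a prior user turn plus the "ideal" reply.
        messages.append({"role": "user", "content": shot_passage})
        messages.append({"role": "assistant", "content": shot_continuation})
    messages.append({"role": "user", "content": passage})
    return messages


# Placeholder shots (hypothetical text, not drawn from the paper's data).
shots = [(f"Hypothetical passage {i}.", f"Hypothetical continuation {i}.")
         for i in range(20)]
target = "A word now about the application of cold light to instantaneous photography."

one_shot = build_messages(shots[:1], target)
twenty_shot = build_messages(shots, target)
print(len(one_shot), len(twenty_shot))
```

The only difference between the two conditions in this sketch is how many example pairs precede the final passage; the model's weights are untouched in both cases.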

The example given in the paper features one of ChatGPT-4o’s blog-like attempts to continue the real period text about photography:

‘The use of cold light in instantaneous photography has revolutionized the approach to capturing images under varying light conditions. Traditionally, photographers struggled with the harshness and variability of natural light or the intrusive nature of flash photography, which could disturb the ambiance of a scene or the natural behavior of subjects, particularly in wildlife and candid human photography.

‘Cold light, with its softer and more diffused nature… allows for a more natural representation of colors and textures, which is often lost under harsh artificial lighting.’

As we can easily see, this generic, Wiki-style text does not match the prolix and elaborate style of the original period text. Further, it does not really continue from where the original content leaves off, but launches into a tangential, abstract musing on one of the sub-topics.

The team also tested GPT‑1914, a smaller GPT‑2–class model trained from scratch on 26.5 billion tokens of literature dated between 1880 and 1914.

Though its output was less coherent than that of ChatGPT‑4o, it was more consistent with the style of the source period. The sole example provided in the paper, again as a completion of the real-world period text on photography, reads:

‘The principle of its action has been already explained (p. 4). We shall here only mention that it can be applied with advantage when we wish to obtain very rapid photographs on paper coated with collodion, as well as those taken by means of gelatine plates.

‘In such cases the exposure must not exceed one second at least; but if the picture is desired to be developed in less time – say half a second – then the temperature should never fall below 20° C., otherwise the image will become too dark after development; moreover, the plate would lose its sensitiveness under these conditions.

‘For ordinary purposes, however, it suffices merely to expose the sensitive surface to a low degree of heat without any special precautions being necessary beyond keeping the’ [sic]

Since even the real and original material is arcane and quite difficult to follow, it is hard to understand the extent to which GPT-1914 has accurately picked up from the original; but the output certainly sounds more period-authentic.

However, the authors concluded from this experiment that simple prompting does little to overcome the contemporary biases of a large pretrained model such as ChatGPT-4o.

The Plot Thickens

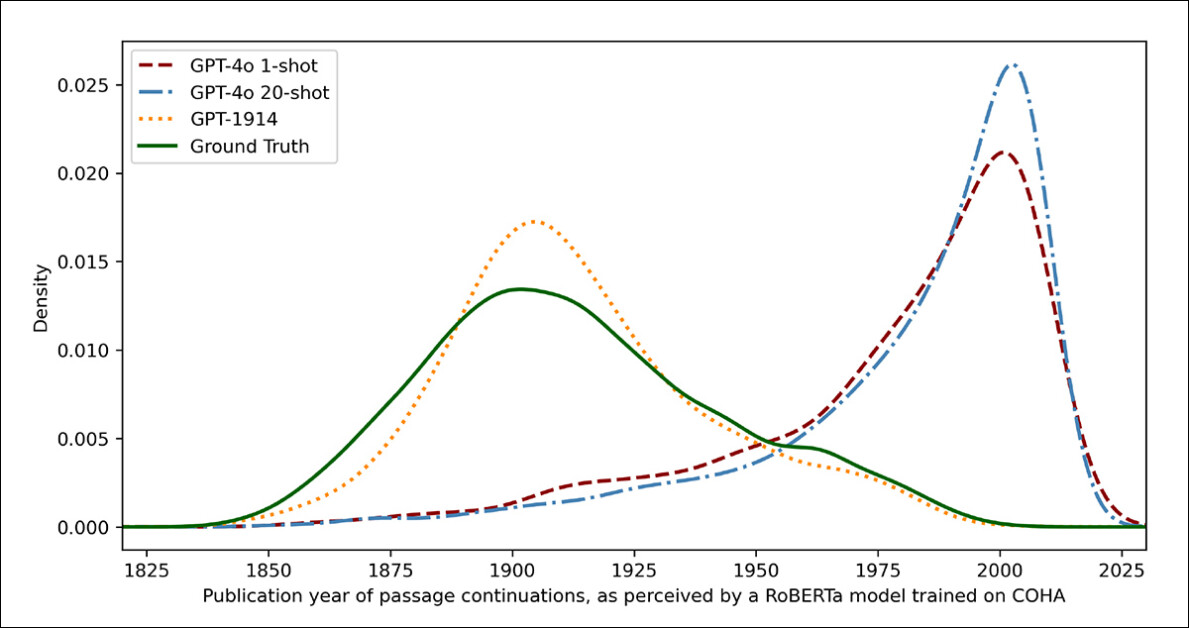

To measure how closely the model outputs resembled authentic historical writing, the researchers used a statistical classifier to estimate the likely publication date of each text sample. They then visualized the results using a kernel density plot, which shows where the model thinks each passage falls on a historical timeline.

Estimated publication dates for real and generated text, based on a classifier trained to recognize historical style (1905–1914 source texts compared with continuations by GPT‑4o using one-shot and 20-shot prompts, and by GPT‑1914 trained only on literature from 1880–1914).
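For readers unfamiliar with kernel density plots, the sketch below shows the underlying idea: each predicted publication year contributes a small Gaussian bump, and the bumps are averaged into a smooth curve. The predicted years and the bandwidth are invented for illustration; the paper's plotting settings are not given in this article.

```python
import math


def gaussian_kde(samples, bandwidth):
    """Return a function estimating the density of `samples` at a point x."""
    n = len(samples)
    norm = 1.0 / (n * bandwidth * math.sqrt(2 * math.pi))

    def density(x):
        # Sum of Gaussian bumps centred on each sample.
        return norm * sum(
            math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples
        )

    return density


# Hypothetical classifier outputs (predicted years) for a handful of passages.
predicted_years = [1905, 1908, 1910, 1912, 1915]
density = gaussian_kde(predicted_years, bandwidth=5.0)

# Evaluate the curve on a timeline, as a plotting library would.
timeline = [1800 + 0.5 * i for i in range(441)]  # 1800 .. 2020
curve = [density(year) for year in timeline]
peak_year = timeline[curve.index(max(curve))]
print(peak_year)
```

A curve that peaks near 1910 would correspond to text the classifier considers period-appropriate, whereas a peak near 2000 would indicate a modern register.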

The fine‑tuned RoBERTa model used for this task, the authors note, is not flawless, but was nonetheless able to highlight general stylistic trends. Passages written by GPT‑1914, the model trained entirely on period literature, clustered around the early twentieth century – similar to the original source material.

By contrast, ChatGPT-4o’s outputs, even when prompted with multiple historical examples, tended to resemble twenty‑first‑century writing, reflecting the data it was originally trained on.

The researchers quantified this mismatch using Jensen-Shannon divergence, a measure of how different two probability distributions are. GPT‑1914 scored a close 0.006 compared to real historical text, while ChatGPT‑4o’s one-shot and 20-shot outputs showed much wider gaps, at 0.310 and 0.350 respectively.
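To make the metric concrete, here is a minimal sketch of Jensen-Shannon divergence computed over two histograms of predicted dates. The decade counts are invented, and the use of base-2 logarithms (which bounds the score between 0 and 1) is an assumption, since the article does not say which base the paper uses.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence in bits; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Jensen-Shannon divergence between two histograms over the same bins."""
    total_p, total_q = sum(p), sum(q)
    p = [x / total_p for x in p]          # normalize counts to probabilities
    q = [x / total_q for x in q]
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]  # the midpoint distribution
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Hypothetical counts of predicted dates per bin (e.g. 1890s, 1900s, 1910s, 2000s).
real_text = [5, 40, 50, 5]
period_model = [6, 42, 47, 5]
modern_model = [1, 4, 10, 85]

print(round(js_divergence(real_text, period_model), 4))
print(round(js_divergence(real_text, modern_model), 4))
```

Identical distributions score 0 and completely non-overlapping ones score 1 under this convention, so a value such as 0.006 indicates near-identical date distributions.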

The authors argue that these findings indicate prompting alone, even with multiple examples, is not a reliable way to produce text that convincingly simulates a historical style.

Completing the Passage

The paper then investigates whether fine-tuning might produce a superior result. This process directly alters a model’s weights by ‘continuing’ its training on user-specified data, which can degrade the model’s original core functionality, but can significantly improve its performance on the domain emphasized during fine-tuning.

In the first fine-tuning experiment, the team trained GPT‑4o‑mini on around two thousand passage-completion pairs drawn from books published between 1905 and 1914, with the aim of seeing whether a smaller-scale fine-tuning could shift the model’s outputs toward a more historically accurate style.
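A sketch of how passage-completion pairs might be packaged for this kind of fine-tuning follows. It writes one JSON object per line, each holding a `messages` list, which is the general shape of chat fine-tuning data for hosted models; the helper name `to_jsonl`, the shortened system prompt, and the sample pair are assumptions for illustration, and the paper's exact data format is not described in this article.

```python
import json


def to_jsonl(pairs, system_prompt):
    """Serialize (passage, continuation) pairs as chat-format JSONL text."""
    lines = []
    for passage, continuation in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": passage},
                # The genuine period continuation is the training target.
                {"role": "assistant", "content": continuation},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


# Hypothetical pair; a real dataset would hold around two thousand of these.
pairs = [
    ("A word now about the application of cold light",
     "to instantaneous photography."),
]
jsonl_text = to_jsonl(pairs, "Continue this passage in the same style.")
print(jsonl_text)
```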

Using the same RoBERTa-based classifier that acted as a judge in the earlier tests to estimate the stylistic ‘date’ of each output, the researchers found that in the new experiment, the fine-tuned model produced text closely aligned with the ground truth.

Its stylistic divergence from the original texts, measured by Jensen-Shannon divergence, dropped to 0.002, generally in line with GPT‑1914:

Estimated publication dates for real and generated text, showing how closely GPT‑1914 and a fine-tuned version of GPT‑4o‑mini match the style of early twentieth-century writing (based on books published between 1905 and 1914).

However, the researchers caution that this metric may only capture superficial features of historical style, and not deeper conceptual or factual anachronisms.

‘[This] is not a very sensitive test. The RoBERTa model used as a judge here is only trained to predict a date, not to discriminate authentic passages from anachronistic ones. It probably uses coarse stylistic evidence to make that prediction. Human readers, or larger models, might still be able to detect anachronistic content in passages that superficially sound “in-period.”’

Human Touch

Finally, the researchers conducted human evaluation tests using 250 hand-selected passages from books published between 1905 and 1914, and they observe that many of these texts would likely be interpreted quite differently today than they were at the time of writing:

‘Our list included, for instance, an encyclopedia entry on Alsace (which was then part of Germany) and one on beri-beri (which was then often explained as a fungal disease rather than a nutritional deficiency). While those are differences of fact, we also selected passages that would display subtler differences of attitude, rhetoric, or imagination.

‘For instance, descriptions of non-European places in the early twentieth century tend to slide into racial generalization. A description of sunrise on the moon written in 1913 imagines rich chromatic phenomena, because no one had yet seen photographs of a world without an [atmosphere].’

The researchers created short questions that each historical passage could plausibly answer, then fine-tuned GPT‑4o‑mini on these question–answer pairs. To strengthen the evaluation, they trained five separate versions of the model, each time holding out a different portion of the data for testing.

They then produced responses using both the default versions of GPT-4o and GPT-4o‑mini, as well as the fine‑tuned variants, each evaluated on the portion it had not seen during training.
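The held-out design described above resembles five-fold cross-validation, which can be sketched as follows. The even split of 250 items into five folds of 50, the shuffling, and the function name `k_fold_splits` are assumptions; the article does not say how the paper partitioned its data.

```python
import random


def k_fold_splits(items, k=5, seed=0):
    """Yield (train, test) pairs so that every item is held out exactly once."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]  # k roughly equal slices
    for i in range(k):
        test = folds[i]
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, test


# Stand-ins for the 250 question-answer pairs.
qa_pairs = [f"qa_{i}" for i in range(250)]
splits = list(k_fold_splits(qa_pairs, k=5))

for train, test in splits:
    # One model would be fine-tuned on `train` and evaluated only on `test`.
    print(len(train), len(test))
```

Because each fine-tuned variant answers only questions it never saw in training, a plausible answer cannot simply be a memorized passage.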

Lost in Time

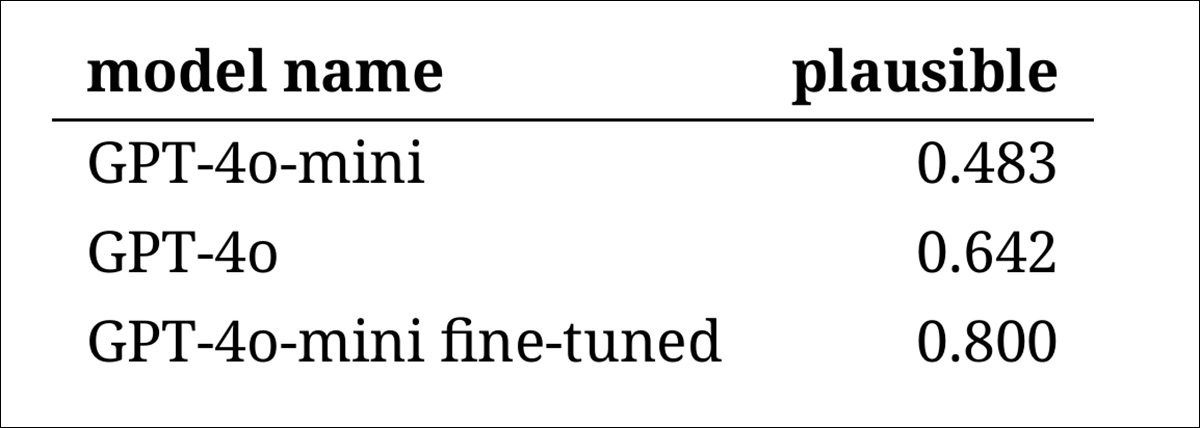

To assess how convincingly the models could imitate historical language, the researchers asked three expert annotators to review 120 AI-generated completions, and judge whether each one seemed plausible for a writer in 1914.

This direct evaluation approach proved more challenging than expected: although the annotators agreed on their assessments nearly eighty percent of the time, the imbalance in their judgments (with ‘plausible’ chosen twice as often as ‘not plausible’) meant that their actual level of agreement was only moderate, as measured by a Cohen’s kappa score of 0.554.
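The gap between raw agreement and kappa can be reproduced with a small worked example. The sketch below computes Cohen's kappa for one pair of raters, using invented labels chosen so that the raters agree on 80 percent of 120 items and each picks ‘plausible’ twice as often as ‘not plausible’. How the paper combined scores across its three annotators is not stated in this article, so the pairwise setup is an assumption.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    # Chance agreement: probability both raters pick the same label at random,
    # given how often each rater uses each label.
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    return (observed - expected) / (1 - expected)


# Invented judgments: P = plausible, N = not plausible.
# 68 both-P, 28 both-N, and 24 disagreements split evenly.
rater_a = ["P"] * 68 + ["P"] * 12 + ["N"] * 12 + ["N"] * 28
rater_b = ["P"] * 68 + ["N"] * 12 + ["P"] * 12 + ["N"] * 28

kappa = cohens_kappa(rater_a, rater_b)
print(round(kappa, 3))
```

With these labels, chance alone would yield agreement on 5/9 of the items, so 80 percent raw agreement translates into a kappa of only 0.55, close to the figure the study reports.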

The raters themselves described the task as difficult, often requiring additional research to evaluate whether a statement aligned with what was known or believed in 1914.

Some passages raised difficult questions about tone and perspective – for example, whether a response was appropriately limited in its worldview to reflect what would have been typical in 1914. This kind of judgment often hinged on the level of ethnocentrism (i.e., the tendency to view other cultures through the assumptions or biases of one’s own).

In this context, the challenge was to decide whether a passage expressed just enough cultural bias to seem historically plausible without sounding too modern, or too overtly offensive by today’s standards. The authors note that even for scholars familiar with the period, it was difficult to draw a sharp line between language that felt historically accurate and language that reflected present-day ideas.

Nonetheless, the results showed a clear ranking of the models, with the fine-tuned version of GPT‑4o‑mini judged most plausible overall:

Annotators’ assessments of how plausible each model’s output appeared

Whether this level of performance, rated plausible in eighty percent of cases, is reliable enough for historical research remains unclear – particularly since the study did not include a baseline measure of how often genuine period texts might be misclassified.

Intruder Alert

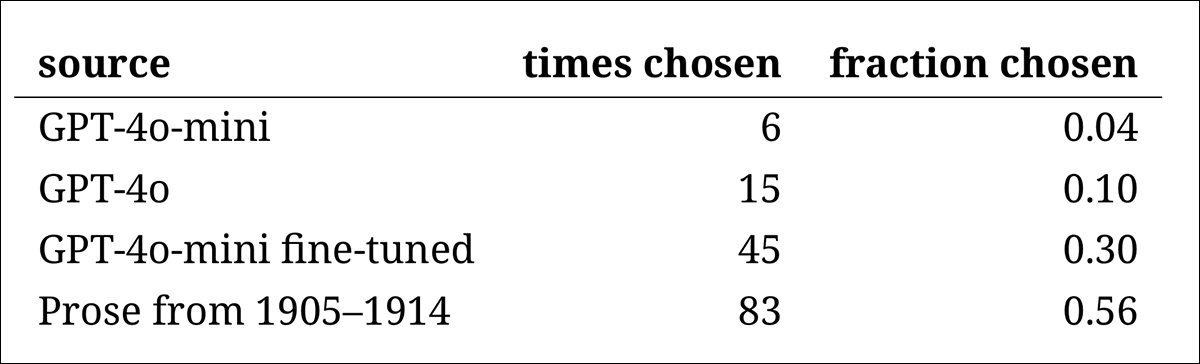

Next came an ‘intruder test’, wherein expert annotators were shown four anonymous passages answering the same historical question. Three of the responses came from language models, while one was a genuine excerpt from an early twentieth-century source.

The task was to identify which passage was the original one, genuinely written during the period.

This approach did not ask the annotators to rate plausibility directly, but rather measured how often the real passage stood out from the AI-generated responses; in effect, it tested whether the models could fool readers into thinking their output was authentic.

The ranking of the models matched the results from the earlier judgment task: the fine-tuned version of GPT‑4o‑mini was the most convincing among the models, but still fell short of the real thing.

The frequency with which each source was correctly identified as the authentic historical passage.

This test also served as a useful benchmark: since the genuine passage was identified more than half the time, the gap between authentic and synthetic prose evidently remained noticeable to human readers.

A statistical analysis known as McNemar’s test confirmed that the differences between the models were meaningful, except in the case of the two untuned versions (GPT‑4o and GPT‑4o‑mini), which performed similarly.
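McNemar's test compares two systems judged on the same items by looking only at the cases where they disagree. The sketch below implements the exact (binomial) form of the test; whether the paper used the exact or the chi-squared version is not stated in this article, and the discordant counts shown are invented.

```python
from math import comb


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value.

    b: items where system A succeeded and system B failed.
    c: items where system B succeeded and system A failed.
    Under the null hypothesis each discordant item is a fair coin flip.
    """
    n = b + c
    if n == 0:
        return 1.0  # no disagreements, so no evidence of a difference
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical discordant counts for two pairs of models.
print(mcnemar_exact(0, 10))   # lopsided disagreements: small p-value
print(mcnemar_exact(5, 5))    # balanced disagreements: p-value of 1.0
```

A small p-value suggests that one model fools annotators more often than the other by more than chance, while balanced disagreements, as might occur between two untuned models, give no such evidence.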

The Future of the Past

The authors found that prompting modern language models to adopt a historical voice did not reliably produce convincing results: fewer than two-thirds of the outputs were judged plausible by human readers, and even this figure likely overstates performance.

In many cases, the responses included explicit signals that the model was speaking from a present-day perspective – phrases such as ‘in 1914, it is not yet known that…’ or ‘as of 1914, I am not familiar with…’ were common enough to appear in as many as one-fifth of completions. Disclaimers of this kind made it clear that the model was simulating history from the outside, rather than writing from within it.

The authors state:

‘The poor performance of in-context learning is unfortunate, because these methods are the easiest and cheapest ones for AI-based historical research. We emphasize that we have not explored these approaches exhaustively.

‘It may turn out that in-context learning is adequate—now or in the future—for a subset of research areas. But our initial evidence is not encouraging.’

The authors conclude that while fine-tuning a commercial model on historical passages can produce stylistically convincing output at minimal cost, it does not fully eliminate traces of modern perspective. Pretraining a model entirely on period material avoids anachronism but demands far greater resources, and results in less fluent output.

Neither method offers a complete solution, and, for now, any attempt to simulate historical voices appears to involve a tradeoff between authenticity and coherence. Further research, they suggest, will be needed to clarify how best to navigate that tension.

Conclusion

Perhaps one of the most interesting questions to arise out of the new paper is that of authenticity. While they are not perfect tools, loss functions and metrics such as LPIPS and SSIM give computer vision researchers at least a like-on-like methodology for evaluating against ground truth.

When generating new text in the style of a bygone era, by contrast, there is no ground truth – only an attempt to inhabit a vanished cultural perspective. Trying to reconstruct that mindset from literary traces is itself an act of quantization, since such traces are merely evidence, while the cultural consciousness from which they emerge remains beyond inference, and likely beyond imagination.

On a practical level too, the foundations of modern language models, shaped by present-day norms and data, risk reinterpreting or suppressing ideas that would have appeared reasonable or unremarkable to an Edwardian reader, but which now register as (frequently offensive) artifacts of prejudice, inequality or injustice.

One wonders, therefore, even if we could create such a colloquy, whether it might not repel us.

First published Friday, May 2, 2025