OpenAI’s $200 ChatGPT Pro: The AI That Thinks Harder (But Do You Need It?)

OpenAI just introduced what they’re calling their “world’s smartest model.” It comes with a monthly price tag of $200 and promises to think harder, work longer, and solve more complex problems than anything we’ve seen before. But in a world where AI announcements seem to be dropping every week, this deserves a closer look.

The new ChatGPT Pro, powered by the o1 model, is not just a routine upgrade. While regular ChatGPT has become the Swiss Army knife of AI tools, this new offering is more like specialized surgical equipment: incredibly powerful, but not for everyone.

What o1 really has to offer

Let’s cut through the hype and look at what makes o1 different. The model shows some impressive numbers, but the key is where these improvements actually make a difference.

In OpenAI’s benchmark results, o1 shows improvements in three key areas:

- Mathematical reasoning: The model scores 50% on AIME 2024 math competition problems, up from 37% in previous versions. More importantly, that performance holds up consistently: on a stricter reliability test, which only counts a question as solved if the model answers it correctly 4 out of 4 times, o1 pro mode significantly outperforms its predecessors.

- Scientific reasoning: On PhD-level science questions, o1 achieves a 74% success rate, with even more impressive gains in consistency. What’s interesting is how this translates to real research: researchers are already using it to design advanced biological experiments.

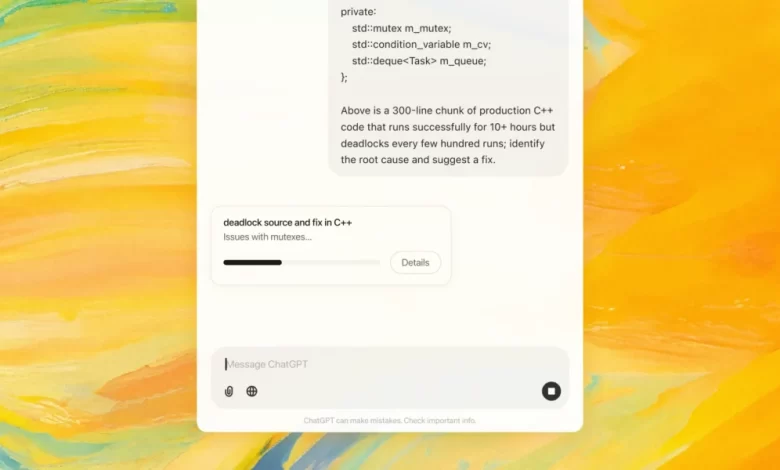

- Programming and technical analysis: Perhaps most telling, o1 achieves a 62% pass rate on advanced programming challenges, demonstrating particular strength in solving complex, multi-step problems. But – and this is crucial – it actually struggles with simpler, iterative tasks that require back-and-forth conversations.

Image: OpenAI

The real innovation here is not just raw performance, but reliability. When a problem demands more thought, the model actually spends it, taking more time to work through and check its answers.
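To make the difference between raw accuracy and the “4 out of 4” reliability test concrete, here is a minimal Python sketch. It assumes each question is attempted four times and, as described above, counts a question as reliably solved only if all four attempts are correct. The function names and the results are hypothetical, made up for illustration; this is not OpenAI’s data or evaluation code.

```python
from typing import List

# Outcomes of independent attempts at one question (True = correct answer).
Attempts = List[bool]


def average_accuracy(results: List[Attempts]) -> float:
    """Share of all individual attempts that were correct."""
    total = sum(len(attempts) for attempts in results)
    correct = sum(sum(attempts) for attempts in results)  # True counts as 1
    return correct / total


def strict_reliability(results: List[Attempts], k: int = 4) -> float:
    """Share of questions answered correctly in all k of k attempts."""
    solved = sum(1 for attempts in results if len(attempts) == k and all(attempts))
    return solved / len(results)


# Hypothetical results for four questions, made up for illustration only.
hypothetical_results = [
    [True, True, True, True],      # solved every time
    [True, False, False, False],   # solved once, then failed three times
    [True, True, False, True],     # usually right, but not always
    [False, False, False, False],  # never solved
]

print(f"Average accuracy: {average_accuracy(hypothetical_results):.0%}")
print(f"4/4 reliability:  {strict_reliability(hypothetical_results):.0%}")
```

With these made-up results, the model is right on 50% of all attempts but reliably solves only 25% of the questions. That gap is exactly what a 4-out-of-4 test is designed to expose.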

But there’s a kicker: all this extra “thinking” comes with compromises. The model is noticeably slower, and some responses take much longer to arrive. For many everyday tasks, this extra power is not only unnecessary but can even be counterproductive.

What happens with so much computing power?

Let’s talk about what actually happens when you give an AI model more computing power. Forget the marketing talk: what we’re seeing with o1 is fascinating because it completely changes the way we think about AI assistance.

Think of it as the difference between a quick conversation with a colleague and an in-depth strategy session. Standard AI models are great for quick chats: they’re snappy, helpful, and get the job done. But o1? It’s like having a senior expert who takes their time, thinks carefully, and sometimes comes back with insights you hadn’t even considered.

What is actually revolutionary about this approach?

- Deeper ‘thinking’: When you give an AI model more time to ‘think’, it not only thinks longer, but it also thinks differently. It examines multiple perspectives and considers edge cases. This is why researchers find it particularly valuable for experimental design and hypothesis generation.

- Reliability: Here’s something no one is talking about. Consistency might be o1’s real superpower. While other models may solve a complex problem once and then fail the next three times, o1 shows remarkable consistency in its high-level reasoning. For professionals working on critical problems, that reliability matters a great deal.

The Smart Buyer’s Guide to AI Power Tools

We need to have an honest conversation about that $200 price tag. Is it really worth it? Well, that depends entirely on how AI assistance fits into your workflow.

Interestingly, the people who could benefit most from o1 are not necessarily those working on the most complex problems; they are the ones working on problems where mistakes are extremely costly. Unless you’re in that kind of situation, the extra power can actually slow you down.

Using o1 effectively requires a fundamental change in how you approach working with AI:

- Depth over speed
  - Instead of quick back-and-forth exchanges, treat each prompt as a well-thought-out research question
  - Plan for longer response times, but expect more extensive analysis
- Quality over quantity
  - Focus on complex, high-value problems
  - Use standard models for routine tasks
- Strategic implementation
  - Combine o1 with other AI tools for an optimized workflow
  - Save the heavy computing power for where it matters most

o1 doesn’t try to be everything to everyone. Instead, it forces us to think more strategically about how we use AI tools. Perhaps the real innovation here isn’t just the technology, but the way it pushes us to rethink our approach to AI assistance.

Think of your AI toolkit as a professional kitchen. Yes, you can use the industrial equipment for anything, but master chefs know exactly when to use the fancy sous vide machine and when a simple pan will do the job better.

Before you jump into that $200 plan, try this: keep a log of your AI interactions for a week and flag the ones that really needed deeper thought rather than a quick answer. That will tell you more about whether you need o1 than any benchmark ever could.
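If you want to make that one-week log a little more systematic, a few lines of Python are enough to tally it. This is a minimal sketch: the `Interaction` record, its fields, and the sample entries are all hypothetical, and deciding whether an interaction needed deep reasoning is your own judgment call, not something the code works out for you.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Interaction:
    task: str                   # short description of what you asked the AI
    needs_deep_reasoning: bool  # your own after-the-fact judgment


def deep_reasoning_share(log: List[Interaction]) -> float:
    """Fraction of logged interactions flagged as needing deeper thought."""
    if not log:
        return 0.0
    flagged = sum(1 for item in log if item.needs_deep_reasoning)
    return flagged / len(log)


# Hypothetical week of interactions, for illustration only.
week_log = [
    Interaction("Rewrite an email more politely", False),
    Interaction("Summarize meeting notes", False),
    Interaction("Debug a race condition in a job scheduler", True),
    Interaction("Draft social media captions", False),
    Interaction("Check a statistical analysis plan for flaws", True),
]

share = deep_reasoning_share(week_log)
print(f"{share:.0%} of this week's interactions needed deeper reasoning")
for item in week_log:
    if item.needs_deep_reasoning:
        print("-", item.task)
```

With the sample entries, this prints `40% of this week's interactions needed deeper reasoning` followed by the two flagged tasks. There is no magic cut-off here; what share would justify the $200 plan is up to you.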

What excites me most about o1 is not what it can do today, but what it tells us about tomorrow. We’re seeing AI evolve from a tool that tries to do everything into one that knows exactly what it does best.

Whether you jump on the o1 bandwagon or not, one thing is certain: the way we think about and use AI is evolving, and that’s something worth paying attention to.