The latest viral ChatGPT trend is doing ‘reverse location search’ from photos

There is a somewhat concerning new trend going viral: people are using ChatGPT to figure out the location shown in photos.

This week, OpenAI released its latest AI models, o3 and o4-mini, both of which can “reason” through uploaded images. In practice, the models can crop, rotate, and zoom in on photos, even blurry and distorted ones, to analyze them thoroughly.

These image-analyzing capabilities, combined with the models' ability to search the web, make for a powerful location-finding tool. Users on X quickly discovered that o3 in particular is quite good at deducing cities, landmarks, and even restaurants and bars from subtle visual clues.

Wow, nailed it and not even a tree in sight. pic.twitter.com/BVCoe1FQ0Z

– Swax (@swax) April 17, 2025

In many cases, the models do not appear to be drawing on “memories” of earlier ChatGPT conversations, or on EXIF data, which is the metadata attached to photos that reveals details such as where the photo was taken.

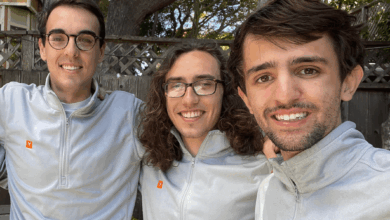

X is filled with examples of users giving ChatGPT restaurant menus, neighborhood snaps, facades, and self-portraits, and instructing o3 to imagine it is playing “GeoGuessr,” an online game that challenges players to guess locations from Google Street View images.

This is a fun ChatGPT o3 feature. GeoGuessr! pic.twitter.com/hrcmixs8yd

– Jason Barnes (@Vyrotek) April 17, 2025

It is an obvious potential privacy problem. There is nothing preventing a bad actor from taking a screenshot of, say, a person's Instagram Story and using ChatGPT to try to dox them.

o3 is insane

I asked a friend of mine to give me a random photo

They gave me a random photo they took in a library

o3 knows it in 20 seconds and it is right pic.twitter.com/0k8dxifkoy

– Yumi (@izyuuuumi) April 17, 2025

Of course, this could be done even before the launch of o3 and o4-mini. TechCrunch ran a number of photos through o3 and an older model without image-reasoning capabilities, GPT-4o, to compare the models' location-guessing skills. Surprisingly, GPT-4o arrived at the same, correct answer as o3 more often than not, and took less time.

There was at least one instance during our brief testing when o3 found a place GPT-4o could not. Given a photo of a purple, mounted rhino head in a dimly lit bar, o3 correctly answered that it was from a Williamsburg speakeasy, not a British pub, as GPT-4o had guessed.

That does not mean o3 is flawless in this respect. Several of our tests failed: o3 either got stuck in a loop, unable to arrive at an answer it was reasonably confident about, or volunteered a wrong location. Users on X have also noted that o3 can be pretty far off in its location deductions.

But the trend illustrates some of the emerging risks presented by more capable, so-called reasoning AI models. There appear to be few safeguards in place to prevent this kind of “reverse location lookup” in ChatGPT, and OpenAI, the company behind ChatGPT, does not address the issue in its safety report for o3 and o4-mini.

We have reached out to OpenAI for comment. We will update our piece if they respond.

Updated 10:19 p.m. Pacific: Hours after this story was published, a spokesperson for OpenAI sent the following statement:

“OpenAI o3 and o4-mini bring visual reasoning to ChatGPT, making it more helpful in areas like accessibility, research, or identifying locations in emergency response. We've worked to train our models to refuse requests for private or sensitive information, added safeguards intended to prohibit the model from identifying private individuals in images, and actively monitor for and take action against abuse of our usage policies on privacy.”